Goodreads


Overview: This case study details my collaboration with a Research and Insights Team focused on assessing the Goodreads app. Through a series of structured evaluations and user testing methodologies, we identified key areas for improvement and developed a prototype to enhance user experience.

Role: UX Researcher, UX/UI Designer

Toolkit: Figma, FigJam, Maze, UserTesting, Optimal Workshop

Context

Mission Statement

Otis Chandler, Co-Founder of Goodreads, envisioned the platform as a space where readers could explore their friends' bookshelves and exchange opinions about literature.

For many users, this app is their primary (and sometimes sole) way to manage their book collections, discover new reads, and discuss books with fellow readers.

Four Key Principles for Goodreads

FRIENDS: Users have a place in the app to view their friends’ books.

TRACKING: Users can track their progress on the book they are currently reading and save books to read later.

RECOMMENDATIONS: Goodreads recommends books to users based on personal settings.

BOOKFIT: Users can determine if a book fits their mood based on community reviews.

Client Problem: Customers Are Demanding an Update

Although Goodreads set out to create the ultimate tool for readers, numerous complaints have flooded its App Store reviews within the last month alone, underscoring a critical need for an update.

Main Objective: Improve Customer Satisfaction

Goodreads currently has a CSAT rating of 73. The purpose of this research is to propose solutions that increase customer satisfaction with the app and keep users engaged.

We started our research with a heuristic evaluation of the Goodreads interface to identify design issues. This provided a foundational understanding of the app before we conducted the competitive analysis.

Heuristic Evaluation

Objective

Identify the top usability issues in the current Goodreads app experience that make it difficult for people to use.

Methodology

We used industry-standard usability heuristics (Nielsen’s 10 usability heuristics) to identify the pain points in the flow.

We also constructed and applied a severity categorization to help prioritize usability issues.

Blended UX Approach: A Heuristic Evaluation Told by Scenarios

Scenarios can bring usability issues to life!

To highlight the impact of usability issues on Goodreads' users, this study will present the heuristic evaluation using a persona and various scenarios. This approach aims to offer additional context and enhance understanding of task-oriented challenges.

Meet Jamie: Our Goodreads Persona

Heuristic Evaluation Walkthrough

As we went through the evaluation, we analyzed Jamie's Goodreads experience across six key tasks using Nielsen's usability principles.

1. Organizing her current book collection

2. Adding her progress statuses for books

3. Searching for a reading community group

4. Adding a friend to her account

5. Exploring reviews for a romance novel

6. Leaving a comment on someone’s review

Main Takeaway: Goodreads Must Work on Rebuilding Trust With Its Users

As evidenced by our scenario with Jamie, there are numerous pitfalls that Goodreads users can fall into that erode their trust in the platform. There are four catastrophic issues that require immediate attention to restore customer faith:

1. It’s difficult to understand invite statuses

2. It’s hard to understand how to edit or delete comments

3. There is no way to tell what books are shared publicly

4. There is no help in finding a reading group

We also found 3 major problem areas across the app experience corresponding with certain heuristics.

Once we had a general idea of the key areas of friction in the Goodreads app, we moved on to the next stage: our competitive evaluation.

Competitive Evaluation

Objective

Understand the competitive landscape and Goodreads’ place within the market to improve its positioning.

Methodology

We chose 3 direct competitors, 2 indirect competitors, and 3 influencers for their similarities and differences in target audience and value proposition.

Our Competitors

Our direct competitors, which share similar value propositions and target audiences, were Fable, StoryGraph, and TBR Bookshelf.

In addition, we analyzed Apple Books and Likewise as indirect competitors, noting their comparable core features but wider audience appeal.

Lastly, we looked for inspiration from Spotify, TikTok, and PlayStation to explore innovative approaches for enhancing Goodreads features.

Snapshot: Competitive Evaluation Walkthrough

Our evaluation of each of the 8 brands involved three stages:

1. High-level brand overview

2. UX evaluation of three key functions (discovering books, viewing books, organizing books)

3. Analysis of specific competitor features, including recommendations for how Goodreads can implement them

So What Does It All Mean?

After analyzing the evaluation data, we positioned each brand on a spectrum ranging from poor to best examples for each key function and feature and found 2 main takeaways.

Goodreads performs at about a mid-tier level on general user tasks (discovering, viewing, and organizing books).

Goodreads is falling behind in its feature capabilities (community, customization, and unique features).

Recommendations and Strategy

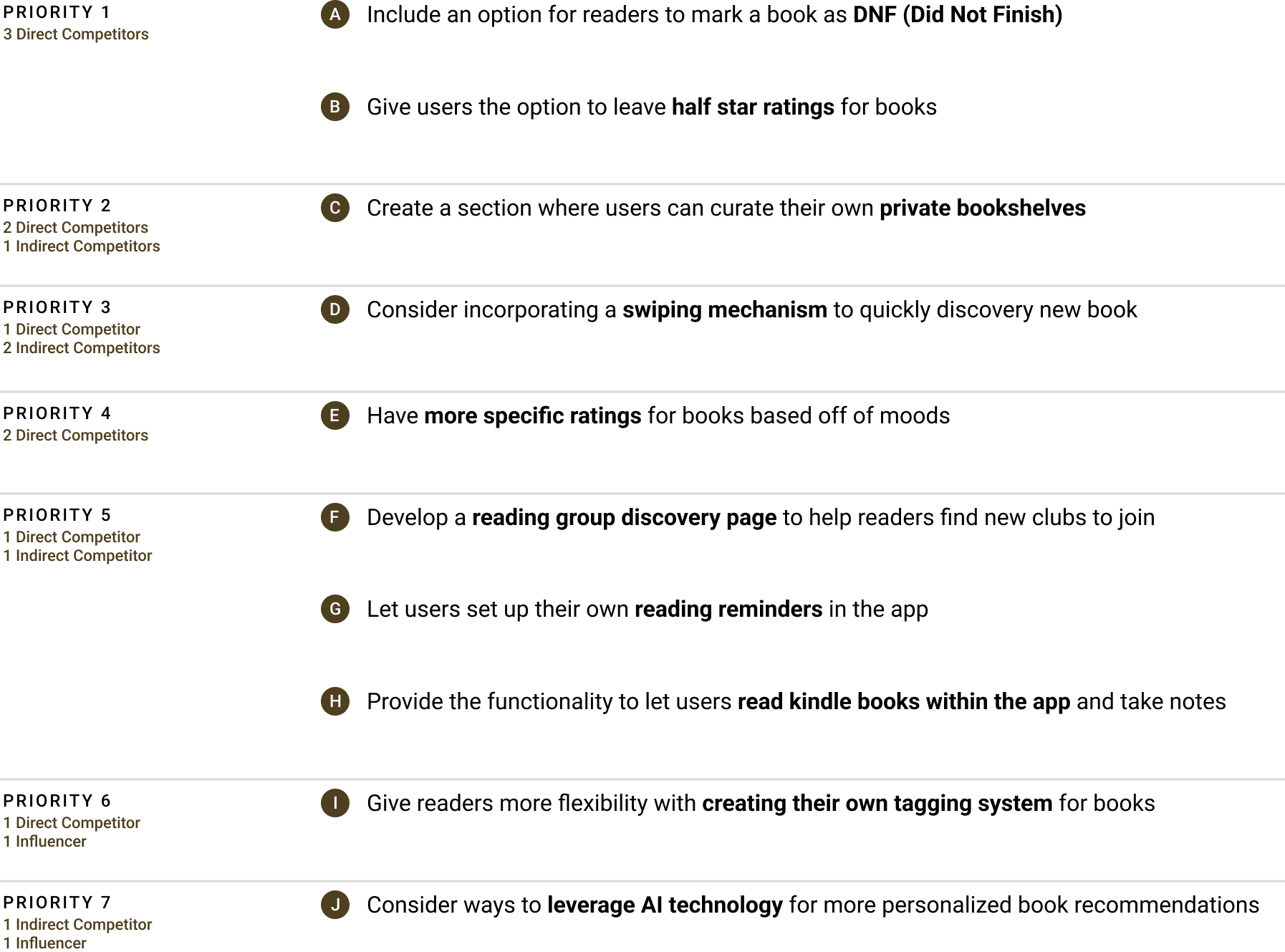

Potential New Features

Our team devised a list of 10 potential new features that Goodreads should consider incorporating based on what its competitors and influencers offer.

These features are prioritized into categories based on the number of competitors and influencers offering similar functionality.
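The prioritization described above amounts to counting how many evaluated brands offer each feature. The sketch below is only an illustration of that idea, not the team's actual tooling: the feature names, brand names, and tier thresholds are all placeholders assumed for the example.

```python
# Hypothetical sketch: rank features by how many evaluated brands offer them.
# Brand names, feature names, and thresholds are placeholders, not study data.

def prioritize_features(offered_by, high=5, medium=3):
    """Return (feature, brand_count, tier) rows, most widely offered first."""
    ranked = []
    for feature, brands in offered_by.items():
        count = len(set(brands))  # ignore duplicate brand entries
        if count >= high:
            tier = "high"
        elif count >= medium:
            tier = "medium"
        else:
            tier = "low"
        ranked.append((feature, count, tier))
    # Sort by count descending; ties are broken alphabetically by feature name
    return sorted(ranked, key=lambda row: (-row[1], row[0]))


example = {
    "Feature A": ["Brand 1", "Brand 2", "Brand 3", "Brand 4", "Brand 5"],
    "Feature B": ["Brand 1", "Brand 4", "Brand 6"],
    "Feature C": ["Brand 2"],
}

for feature, count, tier in prioritize_features(example):
    print(f"{feature}: offered by {count} brands -> {tier} priority")
```

The thresholds are exposed as parameters because the case study does not say where the category boundaries were drawn.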

UI Recommendations

We also highlighted some minor UI adjustments that can be swiftly addressed to enhance the overall experience. Drawing from the UX evaluations, here are five UI recommendations for prompt updates.

How to Win

Goodreads can tap into an unmet market need by prioritizing connections between authors and readers. By becoming a hub for authors to showcase their work and build a following, Goodreads can address writers' publicity challenges. Applying insights from the influencers we researched can foster meaningful author-reader relationships and strengthen the community aspect central to Goodreads' mission.

Here are our recommended next steps for Goodreads. Due to time and resource constraints, we proceeded with the medium-term strategy.

After completing both expert evaluations, our team was left with two crucial questions to solve.

What new features would people find to be the most valuable for the app?

How can we integrate these new features into the current Goodreads’ experience to enhance customer satisfaction?

We devised a three-stage iterative research approach to tackle this new feature implementation project.

1. Employed a closed card sort method to determine which features were the most beneficial ones to focus on. Our team then prototyped these new features.

2. Conducted a qualitative usability test where Goodreads users provided us with feedback on the new feature offerings. We used their feedback to revise our prototypes.

3. Utilized a summative quantitative usability test to validate our design choices and identify any further areas for exploration.

Closed Card Sort

Objective

We utilized the card sort method in an unconventional way to determine which features users prioritize as the most beneficial for integration with the app.

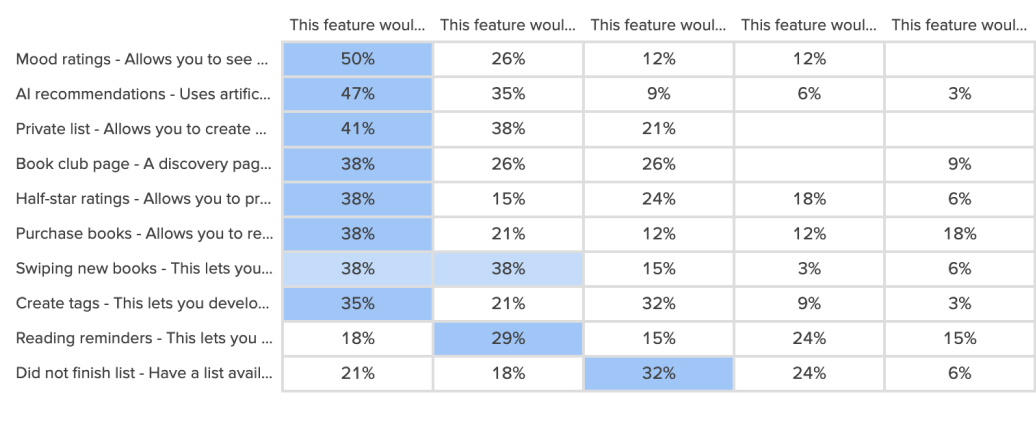

Methodology

We presented 10 cards showcasing potential features identified from the competitive analysis and asked customers to categorize them into predetermined desirability categories ranging from Extremely Beneficial to Detracting from the App.

Sample

• 34 participants

• Mix of active users, prior users and non-users

• The majority of participants were active readers
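A card sort like the one described above can be tallied by counting how often each feature lands in the top category. The following is a minimal sketch under stated assumptions: only the two endpoint category names come from the study, while the data layout, feature names, and sample placements are hypothetical.

```python
from collections import Counter

# Hypothetical sketch of tallying closed card sort results.
# Only the two endpoint category names come from the study; the
# feature names and placements below are made-up sample data.
TOP_CATEGORY = "Extremely Beneficial"


def most_beneficial(placements, n=3):
    """Return the n features most often placed in the top category.

    placements: iterable of (participant_id, feature, category) tuples.
    """
    counts = Counter(
        feature for _, feature, category in placements
        if category == TOP_CATEGORY
    )
    return counts.most_common(n)


sample = [
    ("p1", "Feature A", "Extremely Beneficial"),
    ("p1", "Feature B", "Detracting from the App"),
    ("p2", "Feature A", "Extremely Beneficial"),
    ("p2", "Feature B", "Extremely Beneficial"),
    ("p3", "Feature A", "Extremely Beneficial"),
    ("p3", "Feature C", "Detracting from the App"),
]

print(most_beneficial(sample, n=2))
```

Counting only top-category placements mirrors how the takeaways are reported; a weighted score across all categories would be an equally reasonable alternative.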

High Level Takeaways

Of the 10 features we tested, three were most often classified as extremely beneficial.

Diving into the Initial Feature Prototypes

We created a simple toggle for users to make their bookshelves private.

This addressed a key finding from our heuristic evaluation, which revealed that users lacked clarity on whether their lists were public.

Based on the consistency and standards heuristic, we added a lock icon to indicate private lists and a globe icon to represent public lists.

We enhanced the "add a review" flow to allow users to leave a mood rating.

Inspired by our heuristic evaluation, we improved button feedback by highlighting clickable buttons in green to encourage engagement; once clicked, the button turns brown to confirm the action.

We ensured consistency in language by using "moods" throughout the flow, avoiding other terms like "feelings" or "emotions."

We introduced the AI Librarian feature to enhance book discovery.

Our competitive analysis revealed that while discovering books is a high priority for users, the app's performance here was only average. To improve this, we prioritized the AI Librarian by giving it a dedicated space in the navigation bar.

Drawing from ChatGPT, we incorporated suggested prompts for users and enabled the AI Librarian to auto-generate recommendations that can be quickly added to their shelf.

Qualitative Usability Test

Objective

Identify the primary usability challenges in the initial prototype to guide iterative enhancements.

Methodology

Participants were asked to think aloud, sharing any initial thoughts that came to mind as they completed the following tasks:

Create a new private bookshelf

Leave a joyful mood rating for a book

Receive a book recommendation

They also answered a series of follow-up questions about their overall experience.

Sample

• 3 participants

• All participants were active readers who use the Goodreads app

High Level Takeaways

All three participants were able to accomplish each task successfully and rated every task as satisfactory, providing some positive feedback.

Deprioritizing Private Bookshelves

Since the private bookshelf feature was rated the least beneficial and received minimal constructive feedback, we decided to remove it from the scope of this redesign. Instead, we will focus on improving the mood rating and AI recommendations features.

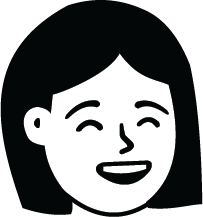

Mood Rating Prototype Updates

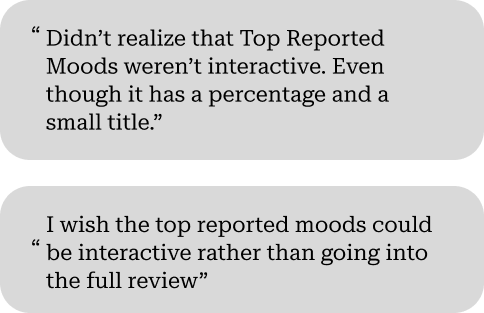

User Feedback

Prototype Updates

Separated star ratings and mood ratings into different flows.

User Feedback

Prototype Updates

Turned the boxes into tags that people can tap to quickly add their mood.

Book Recommendations Prototype Updates

User Feedback

Prototype Updates

Updated the icons to reference a “Librarian,” signaling to users that the AI can provide recommendations.

User Feedback

Prototype Updates

Added a banner to the Home page that describes what the AI Librarian does.

Made language consistent in the app (e.g., changed “recommend” to “promotion”).

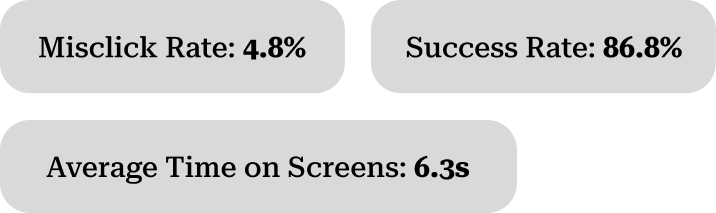

Quantitative Usability Test

Objective

Evaluate the effectiveness of the new features after the first round of iterations.

Methodology

Participants were asked to complete the following two tasks with the prototype:

Leave a general mood rating for a book

Find a recommendation for a specific type of book

After each task, they were asked to rate how easy or difficult it was to complete. At the end of the survey, we had them rate their overall satisfaction with the new features to measure CSAT.

Sample

• 33 participants completed the study

• Mix of participants both familiar and unfamiliar with the Goodreads app.
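For readers unfamiliar with CSAT, one common way to compute it is the percentage of respondents who give a satisfied rating. The sketch below assumes a 1 to 5 satisfaction scale where 4 and 5 count as satisfied; the study does not state which scale or formula was used, and the ratings shown are made up.

```python
# Hypothetical sketch: CSAT as the share of satisfied responses.
# Assumes a 1-5 satisfaction scale where 4 and 5 count as "satisfied";
# the study does not state which scale or formula was used.

def csat(ratings, satisfied_min=4):
    """Return CSAT as a percentage (0-100) of ratings at or above satisfied_min."""
    if not ratings:
        raise ValueError("at least one rating is required")
    satisfied = sum(1 for rating in ratings if rating >= satisfied_min)
    return 100 * satisfied / len(ratings)


sample_ratings = [5, 4, 4, 3, 5, 2, 4, 5, 1, 4]  # made-up responses
print(f"CSAT: {csat(sample_ratings):.0f}")
```

The same function could be applied to per-task ease ratings if those were collected on a comparable scale.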

High Level Takeaways

To measure ease-of-use ratings, we asked people, “How easy or difficult did you feel it was to complete this task?”

Mood Rating Stats

Book Recommendation Stats

The AI librarian feature would require a shift in people’s current mental models of how they receive book recommendations.

Users acknowledge that AI can relieve them of the burden of manual searching, but further research is needed to explore how we can shift their mental models toward using AI for book recommendations.

Summary of Learnings

Heuristic Evaluation: Goodreads has poor feedback, a lack of error prevention, and a mismatch in copy.

Competitive Evaluation: Goodreads currently lags behind in its feature offerings. As a result, we've identified 10 possible new features.

Closed Card Sort: After conducting the card sort on the list of 10 features, we discovered that people rated mood ratings, AI recommendations, and private bookshelves as the most beneficial ones.

Qualitative Usability Test: We built prototypes of the top 3 features and discovered quick wins to address, such as separating mood ratings from star ratings and updating the iconography of the AI librarian.

Quantitative Usability Test: The final test revealed that the majority of users found the features relatively user-friendly, and we received positive feedback. Mood ratings performed exceptionally well, while AI book recommendations need further exploration into shifting mental models.